Web search at the BBC: Part 7 - Shake and crawl

I have been writing a series of posts looking at what I remember of the development of the BBC's web search service, which was recently closed. The service was not always well received internally, and it was certainly unpopular in some quarters outside of the BBC.

During the time that the BBC offered web search it used four different technology partners. The initial contract was with Google, and they were replaced as search provider by Inktomi. Inktomi were subsequently purchased by Yahoo!, and in recent years the BBC had been using results from Microsoft's Live Search.

The BBC did for a while have a crawler out on the Internet calling itself 'BBCi Searchbot', but this was not a serious attempt to build the BBC's own web index. Instead, it was part of an early warning system that alerted editorial staff if one of the externally recommended websites had begun to generate a 404 error, or had changed significantly.
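To give a flavour of that kind of check, here is a minimal sketch in Python. It is not the Searchbot code, which I don't have to hand: the `Snapshot` type, the `check_link` function and the 0.5 similarity threshold are all assumptions made up for illustration. It compares what a crawl saw last time with what it sees now, and flags the link if it has gone 404 or the text has drifted a long way.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Optional


@dataclass
class Snapshot:
    """What a crawl recorded for one recommended link (hypothetical shape)."""
    status: int  # HTTP status code returned by the site
    body: str    # page text retrieved on that visit


def check_link(previous: Snapshot, current: Snapshot,
               threshold: float = 0.5) -> Optional[str]:
    """Return a warning label for editorial staff, or None if all looks well.

    The threshold is an arbitrary assumption: below it, the page is treated
    as having 'changed significantly' since the previous crawl.
    """
    if current.status == 404:
        return "broken"
    # ratio() is 1.0 for identical text and falls towards 0.0 as texts diverge
    similarity = SequenceMatcher(None, previous.body, current.body).ratio()
    if similarity < threshold:
        return "changed"
    return None
```

A real system would also need to fetch the pages politely and decide what counts as 'significant' for each kind of site, which this sketch leaves out entirely.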

The move from Google to Inktomi was controversial - as Pandia reported at the time:

"Last year the British BBC launched their own publicly owned search engine based on the Google database. As reported on Webmaster World, they have now switched to Inktomi based search results. It is not clear why they have done this. The move will certainly make the BBC search engine a less attractive alternative."

Coping with the 'Google Dance'

As part of the switch, Inktomi also provided the crawl and dataset for the BBC's site search. For a while the BBC had been using a sub-set of Google's UK index to provide site search, which showed up some of the weaknesses of Google's product at that time.

By switching to Google from Muscat, we thought that all our site search miseries would be over, but it just exposed a different set of problems. It revealed that the Google crawl of bbc.co.uk, one of the biggest websites in the UK, was not as deep as people expected. It also showed that the index wasn't always fresh.

At that stage in SEO circles there was a phenomenon known as 'the Google dance'. This was when a new version of the Google index was pushed out to various data centres. It meant that you could be searching for keyword 'x', and get different sets of results depending on which set of Google machines was serving your results. Each update was christened, hurricane style, with names that steadily progressed through the alphabet.

The index updated around every 30 days. This was absolutely no good as a site search if you'd just launched a programme-related site the day after the Google index had refreshed, especially if it was for a programme that was going to transmit in the next couple of weeks. It meant your content would not appear in bbc.co.uk results until after the show had been and gone - and there was no iPlayer catch-up in those days. The unmissable was totally missable as far as site search was concerned.

Rankings controversies

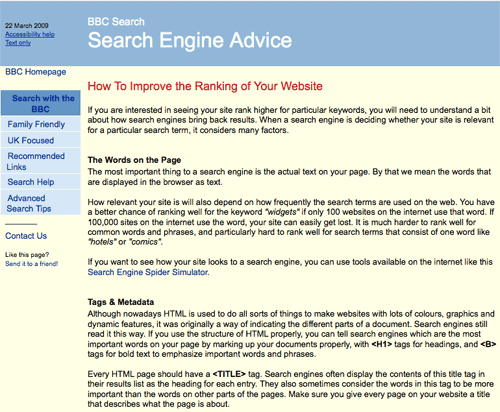

Of course, each time the technology partner or implementation changed, so did the rankings. One of the things that the BBC's web search tried to do was educate UK webmasters about how search engines worked, and what they could do to improve their rankings. The BBC published some guidelines for webmasters, and I also had several comprehensive canned responses to the emails we regularly received asking for advice on how to improve rankings.

It is an educational tradition that the BBC has continued, with Michael Smethurst recently publishing on the BBC Radio Labs blog a comprehensive and brilliant overview of usability, accessibility and search engine optimisation from an information architect's perspective - "Designing for your least able user".

I also had to deal with a fair number of people who were complaining about how their sites were represented in the BBC's search engine.

Some were more justified than others.

There was a huge furore about search impartiality at launch when searching for 'Virgin Radio' caused the BBC to recommend at the top spot 'BBC Radio' instead. I've written about this on currybetdotnet before, not, it must be said, that I've ever totally convinced James Cridland, one of the people complaining at the time.

Essentially, as part of the algorithm, if the system didn't have a recommendation for the search term itself, it would recommend something on a related node in order to show that it had understood the kind of thing you were looking for. This worked fine if you searched for 'Seth Johnson' and the related best link was 'Leeds United', or if you searched for 'neptune' and the related link was 'BBC Science - The Planets'. Not so well if you searched for a commercial radio station, and the recommended link was a TV Licence-funded rival for audience share!
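For the curious, that fallback behaviour can be sketched in a few lines of Python. This is a toy illustration only, not the production algorithm: the `BEST_LINKS` and `RELATED_NODE` tables, the `recommend` function and the idea of walking from one node to a single related node are all assumptions, seeded with the three examples above.

```python
from typing import Optional

# Hypothetical data: nodes that have an editorially chosen best link
BEST_LINKS = {
    "leeds united": "Leeds United",
    "planets": "BBC Science - The Planets",
    "radio": "BBC Radio",
}

# Hypothetical data: each node points at one related node
RELATED_NODE = {
    "seth johnson": "leeds united",
    "neptune": "planets",
    "virgin radio": "radio",
}


def recommend(query: str) -> Optional[str]:
    """Return a best link for the query, falling back to related nodes."""
    node: Optional[str] = query.strip().lower()
    seen = set()
    # Follow related nodes until one has a best link or we run out
    while node is not None and node not in seen:
        if node in BEST_LINKS:
            return BEST_LINKS[node]
        seen.add(node)  # guard against loops in the related-node table
        node = RELATED_NODE.get(node)
    return None
```

With this table, `recommend("Virgin Radio")` finds no link on its own node, steps across to the related 'radio' node and returns 'BBC Radio', which is exactly the sort of result that caused the complaints.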

Other people remained convinced that in some way the BBC was discriminating against them by deliberately suppressing their site in web search rankings. This was a constant source of frustration to me, as very often there was no dissuading them, even if I could painstakingly demonstrate that it was their own HTML or hosting arrangements that were causing them to rank poorly in a UK-specific search engine, and supply the URL to show that Google or Inktomi were ranking them in exactly the same way. One chap even still has a dedicated web page "Bug-Brain Check-in" about how BBCi were persecuting him.

Next...

I'll be wrapping this series up next week with two further posts, looking at the relationship between search and editorial, the plans for syndicating BBCi Web Search, the way the results were kept pr0n free, and how some of the BBC's future plans for making content findable and external linking are rooted in ideas developed in the early 2000s.